Turning Subreddits Into Chatbots

This past weekend, I attended Art Hack Day: Echo Chamber. On Friday evening, we participants introduced ourselves and shared ideas; by Sunday evening, I had, as part of a group, created and exhibited Reddit-powered chatbots. It was an amazing, exhilarating weekend and I came out of it tired but more inspired than I thought possible.

(Art) Hack the Planet

PNCA -- my home for the weekend. credit

Art Hack Day (AHD) is a series of events that brings together techies and artists. Participants divide into ad hoc groups, work on art projects for a day and a half, and then the weekend culminates in an art show. This time around, we were in the uber-beautiful PNCA and sponsored in part by their interdisciplinary Make+Think+Code.

Part of what's lovely about AHD is that, as the name implies, they go out of their way to create a balance between art and tech. This isn't a hack day to make an app or redesign a website; it's a time to collaborate with people of different skill sets, push your boundaries, and create something interesting but not necessarily useful.

Do You Want to Build a Chatbot?

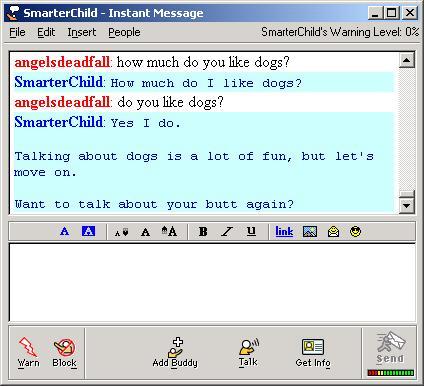

The theme of this AHD was "Echo Chamber", particularly with an eye towards the internet and social media, which easily become echo chambers that do little more than affirm our already-held beliefs. This creative prompt got me thinking about chatbots, which I've been into since I was introduced to SmarterChild in middle school.

A typical interaction with SmarterChild. source

On Friday evening, as we went around the room and introduced ourselves, a couple others indicated an interest in chatbots -- Alec Arme and Steve August. We got together and started brainstorming ideas, which ranged from assigning points to bots' arguments and picking a winner, to creating bots that represented different Greek gods. When we left for the night, we knew that:

- we wanted to make several bots, not just one;

- those bots would be trained or given personalities based on different data sources; and

- we wanted those bots to somehow talk to each other.

At this point, I should mention that none of us had experience with chatbots, and that none of us had worked together before. We have very different skill sets -- Alec works mostly with VR, Steve specializes in psychology and using tech to create calm, and I do web development and browser-based installations. Whatever our end product would be, we knew it wouldn't be typical.

Enter BigQuery

Any bot needs source material, and we had decided that our bots would be trained on internet communities that could be construed as echo chambers. Even before we were sure what the exact training material would be, we knew that we would have to process a lot of data in order to accurately reflect those communities.

When I think "big data", I think of Google BigQuery. I hadn't worked with it before, but I had had the pleasure of working alongside a team creating a BigQuery-powered trivia game, so I was already familiar with BigQuery as a service and knew the basics of how to use it.

Essentially, BigQuery is a pay-as-you-go service that lets you run SQL queries over humongous data sets in disgustingly little time. It opens the door to people like me, who want to crunch dozens of gigabytes of data, but don't have access to powerful machines.

As if this weren't enough, BigQuery hosts tons of publicly available datasets, so we didn't have to write our own scrapers for comment sections or format the results to be BigQuery-compatible. (My favorite dataset is this 452GB one of DotA gameplay data.)

The dataset that spoke to us the most was the one of all Reddit comments since 2005. Reddit, "the front page of the internet", is divided into subreddits dedicated to specific hobbies or interests. As such, they can easily become echo chambers where all participants agree with each other and validate each other's beliefs.

We decided to base our bots off of specific subreddits' comments from December 2016 to February 2017 -- a cool 52.9GB of data in all. But how would we turn this data source into usable material for chatbots?

Creating a Training Corpus

When I want to generate language, I immediately think of Markov chains. Also known as Markov models, these are processes that let us use statistics collected about an input to probabilistically generate an output.

You can create a Markov chain by going through a body of text ("training corpus") and counting how many times each word occurs, and for each word, what words follow it and how often. You create a profile of the text: how often can you expect a particular word to come up, and what other words can you expect to follow it? You end up with a formula for creating utterances "in the style" of your training corpus.
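A minimal Python sketch of that idea, for illustration only. It is not the project's code, and the function names `build_chain` and `generate` are made up here. It counts which words follow each word in a tiny corpus, then walks those counts to produce an utterance, picking each next word in proportion to how often it followed the current one.

```python
import random
from collections import Counter, defaultdict


def build_chain(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    chain = defaultdict(Counter)
    for word, nextword in zip(words, words[1:]):
        chain[word][nextword] += 1
    return chain


def generate(chain, start, max_words=10, rng=random):
    """Walk the chain from `start`, weighting each step by follower counts."""
    utterance = [start]
    current = start
    # Stop at the length cap or when the current word has no known followers.
    while len(utterance) < max_words and chain.get(current):
        followers = chain[current]
        current = rng.choices(list(followers), weights=list(followers.values()))[0]
        utterance.append(current)
    return " ".join(utterance)


corpus = "the cat sat on the mat and the cat ate the fish"
chain = build_chain(corpus)
print(chain["the"])  # Counter({'cat': 2, 'mat': 1, 'fish': 1})
print(generate(chain, "the"))
```

With a corpus this small the output mostly parrots the input; the "style" only starts to emerge once the counts come from thousands of comments.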

To use subreddits as a training corpus, we ran the following SQL query in BigQuery against the aforementioned Reddit comment tables:

    SELECT word, nextword, COUNT(*) c
    FROM (
      SELECT
        pos,
        word,
        LEAD(word) OVER(ORDER BY pos) nextword
      FROM (
        SELECT
          word,
          pos
        FROM
          FLATTEN( (
            SELECT
              word,
              POSITION(word) pos
            FROM (
              SELECT
                SPLIT(REGEXP_REPLACE(LOWER(body), r'(\n)', r' '), ' ') word
              FROM
                [fh-bigquery:reddit_comments.2017_02],
                [fh-bigquery:reddit_comments.2017_01],
                [fh-bigquery:reddit_comments.2016_12]
              WHERE subreddit = 'dankmemes'
              LIMIT 10000
            ) ), word) ))
    WHERE LENGTH(word) >= 1 AND LENGTH(nextword) >= 1 AND word != nextword
    GROUP BY
      1, 2
    ORDER BY
      c DESC

This is a modified version of the code provided by Felipe Hoffa on the GDELT blog. It reads in the specified tables ([fh-bigquery:reddit_comments.2017_02], etc.) and creates a new table of words, 'nextword's, and the count ('c') of that combination.

Training corpora were created by swapping out the subreddit we looked for -- WHERE subreddit = 'dankmemes' makes our query only count words in comments that had dankmemes listed as a subreddit. We cleaned up the data a bit to make processing easier -- words were all lowercased so we wouldn't have to deal with capitalization, and we stripped out line breaks.
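To show how a result table of `word`, `nextword`, and `c` can become something a bot can look words up in, here is a hedged Python sketch. It assumes the table was exported as CSV with those three column headers; the post doesn't say how the tables were actually exported or loaded, and `load_transitions` is a hypothetical helper.

```python
import csv
import io
from collections import defaultdict


def load_transitions(csv_file):
    """Read rows of (word, nextword, c) into {word: {nextword: count}}."""
    transitions = defaultdict(dict)
    for row in csv.DictReader(csv_file):
        transitions[row["word"]][row["nextword"]] = int(row["c"])
    return transitions


# Stand-in for an exported result table; these rows are made up for illustration.
sample = io.StringIO(
    "word,nextword,c\n"
    "dank,memes,42\n"
    "dank,meme,17\n"
    "memes,are,9\n"
)
table = load_transitions(sample)
print(table["dank"])  # {'memes': 42, 'meme': 17}
```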

We created nine training corpora this way, based on output tables ranging in size from 513KB to 7.16MB. Tolstoy's 864-page Anna Karenina, for comparison, takes up about 1.9MB. We had plenty of data; we were ready to make some bots.

Back to the Frontend

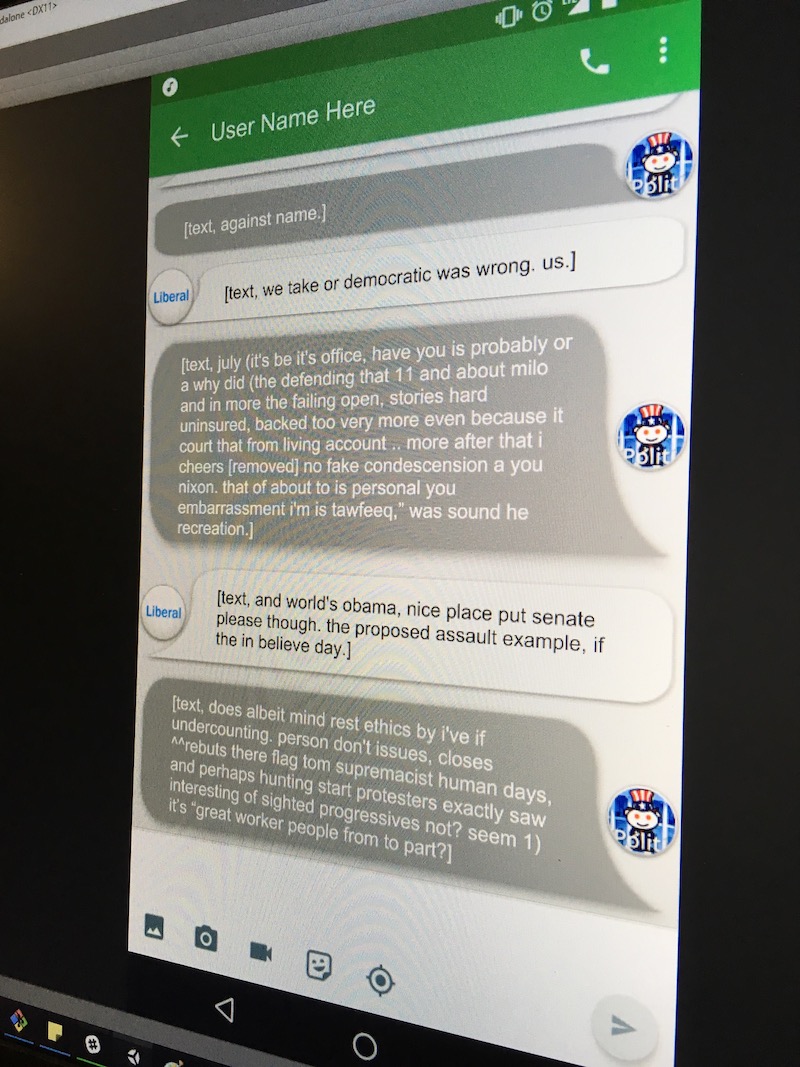

While I was getting the data and reworking it into a usable form, Alec and Steve were putting together our project's frontend. We had decided that we wanted to make something that looked like a chat app, and have our bots appear to text each other as they conversed.

Since the bulk of my work has been web-based, even my installations have leaned heavily on Google Chrome as a frontend. Alec, however, is most experienced developing with Unity, a game engine used widely for 3D games and Virtual Reality experiences. I associate Unity so tightly with those types of projects that I was astounded when Alec volunteered to make our 2D frontend in Unity. It turns out that Unity can also be a powerful way to make UIs and other 2D products, and that Alec knew a Unity plugin that would let us create a connection between his Unity frontend and my Node.js backend with WebSockets.

Alec and Steve teamed up on creating a frontend that mimicked an Android texting UI, complete with user avatars pulled from the subreddits' header images. We would randomly choose two bots and have them "converse" with each other. The conversation would go on for around twenty utterances per bot; then we would choose two new bots and start again.

It's alive! One of our first tests.

The frontend sent, via WebSockets, an event to the backend requesting an utterance from a specific bot. The backend would generate an utterance on the spot and send that text back. The frontend controlled the conversation flow; it would wait to request a new utterance based on how long the previous utterance would take to read.
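The request/response exchange can be sketched without any networking. The Python below is an illustration under assumptions, not the project's Node.js or Unity code: the JSON message shape (`{"bot": ...}`), the 200 words-per-minute reading speed, and the one-second minimum are all invented for the example, as are the names `handle_request` and `reading_delay`.

```python
import json


def reading_delay(text, words_per_minute=200, minimum_seconds=1.0):
    """Estimate how long `text` takes to read, in seconds (frontend side)."""
    word_count = len(text.split())
    return max(minimum_seconds, word_count * 60.0 / words_per_minute)


def handle_request(message, generators):
    """Answer one utterance request (backend side).

    `message` is a JSON string such as {"bot": "dankmemes"}; `generators`
    maps bot names to zero-argument functions that return a new utterance.
    """
    request = json.loads(message)
    bot = request["bot"]
    text = generators[bot]()
    return json.dumps({"bot": bot, "text": text})


# A canned generator stands in for a real Markov bot.
generators = {"dankmemes": lambda: "yeah, dank great?"}
reply = json.loads(handle_request('{"bot": "dankmemes"}', generators))
print(reply["text"], reading_delay(reply["text"]))
```

In a real setup, `handle_request` would be called from a WebSocket message handler, and the frontend would wait `reading_delay` seconds before asking the other bot for its reply.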

We set up a router to let our laptops talk to each other via local IPs (the building's WiFi wasn't cooperating), and voilà -- we were able to run our first tests. The actual installation would have the server and frontend on the same computer; we wouldn't even need an internet connection.

Making Bots Chat

The last stage of the project was cleanup -- on the frontend, finessing assets and behavior; on the backend, stripping out badly-encoded characters (which appeared as �) and trying to get the bots to talk to each other.
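For the backend half of that cleanup, a small sketch in Python, assuming the bad characters had already been decoded into the Unicode replacement character (U+FFFD). The helper name is hypothetical.

```python
REPLACEMENT_CHAR = "\ufffd"  # what undecodable bytes usually turn into after decoding


def strip_bad_characters(text):
    """Remove replacement characters and collapse the whitespace left behind."""
    cleaned = text.replace(REPLACEMENT_CHAR, "")
    return " ".join(cleaned.split())


print(strip_bad_characters("dank \ufffd\ufffd memes\ufffd"))  # dank memes
```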

So far we had been generating utterances apropos of nothing, probabilistically picking a starting word from a bot's corpus and building the rest of the utterance from there. Our goal, though, was to make the bots' texting feel like a conversation, which meant relating their utterances somehow. One way to do this is to relate utterances to predetermined "topics" and pick appropriate responses filed under that same topic. Steve had looked into achieving this with AIML, a markup language for chatbots, earlier on in the weekend. That approach, however, required a smaller, more manually-determined set of utterances than what we had on hand.

We decided it would be enough to get the bots to use the same word across utterances, even if we couldn't guarantee a match between their utterances' actual themes.

We started tracking every utterance in the conversation and ranking the words in each utterance from least- to most-used. Our assumption was that the most common words ("a", "the", "I") are the least important to establishing a theme, and that rarer words ("Iraq", "congress", "emails") are more indicative of it. When generating a new utterance, we would pick its starting word by trying to find one of the more "interesting" words from the previous utterance in the current bot's corpus. Some words were only known by certain bots, but more often than not, the desired word was known by both bots, albeit with different connotations.
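One way to sketch that seed-word selection in Python. This is an illustration, not the project's code: it assumes usage is counted over the conversation so far, breaks ties alphabetically, and returns `None` when the bot knows none of the words; those choices and the name `pick_seed_word` are assumptions.

```python
from collections import Counter


def pick_seed_word(previous_utterance, conversation_counts, bot_vocabulary):
    """Pick the least-used word from the previous utterance that the bot knows.

    Returns None when the bot knows none of the words, so the caller can fall
    back to a random starting word.
    """
    words = previous_utterance.lower().split()
    # Rarest words in the conversation so far come first; ties sort by word.
    ranked = sorted(set(words), key=lambda w: (conversation_counts[w], w))
    for word in ranked:
        if word in bot_vocabulary:
            return word
    return None


conversation_counts = Counter()
history = ["the emails are the story", "the congress saw the emails"]
for utterance in history:
    conversation_counts.update(utterance.lower().split())

vocabulary = {"the", "emails", "congress", "wall"}
print(pick_seed_word(history[-1], conversation_counts, vocabulary))  # congress
```

The chosen word then becomes the starting word for the next bot's Markov walk, which is what lets two bots appear to pick up each other's thread.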

Here's a sample exchange between two bots that demonstrates "staying on topic":

dankmemes: rights die.

The_Donald: die.

dankmemes: what yeah, dank [deleted] dank great?

The_Donald: yeah, oh the third was woman drones. is officials.

dankmemes: yeah, you bot, eating questions re-submit harder being ^^/r/ayylmao2dongerbot thrusting regret a the in actually methinks. basking that get brethren, this more to words.

The_Donald: yeah, oh so.. don't hate what that wtf!!!

Opening Night

AHD culminated in an art show taking over the ground floor and mezzanine of PNCA. Every group installed its project and set it up to be on display for several hours that night.

The diversity of skills brought into AHD meant that a huge variety of projects came out of it. There were Kinect and HoloLens projects; there was 16mm film and a dating app for animated monsters; there was a DDR dance pad that made emoji rain across a VR scene.

Our project was surprisingly easy to set up and ran beautifully all evening. We ran an exported Unity app on a Mac Mini and projected it into a fake smartphone cut out of foamcore.

Live at PNCA! From left to right: Steve, Alec, and me.

Visitors ebbed and flowed through the gallery. Since our project wasn't interactive, I had been afraid that people might not feel interested or engaged, but we ended up with a piece that still drew visitors in and held their attention. Part of the interest, I think, came from being able to see a very topical, polemic bot (like /r/The_Donald), or to see bots of opposing views (/r/Feminism and /r/TheRedPill, for example) matched against each other.

people ❤️ looking ❤️ at ❤️ our ❤️ art

I loved working on this project and am looking for ways to keep working with it. Maybe as a foray into machine learning?

Thanks to Alec and Steve for being amazing teammates and setting us all up for success on a super fun, challenging project. I look forward to lots more art-hacking in the future.

This was my first blog post that wasn't about making this blog! Stay tuned for a post about Markov chains.